Zero-Trust Security in the Age of AI: Building Resilient Systems

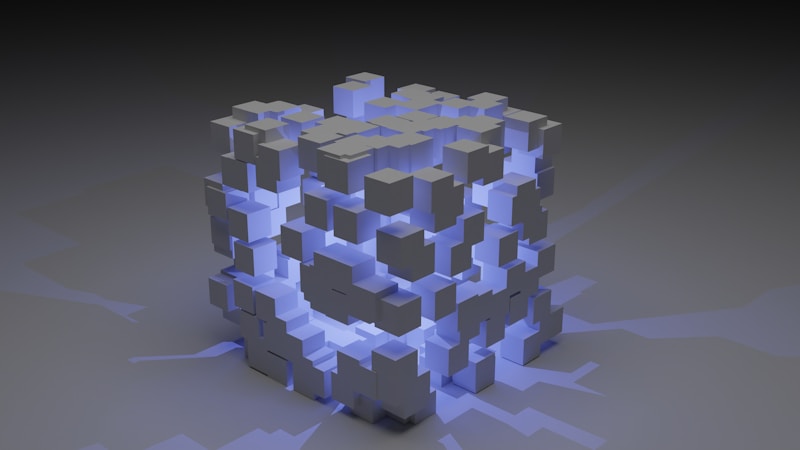

As artificial intelligence (AI) systems become more integrated into critical business operations and handle increasingly sensitive data, they present unique security challenges that traditional security models struggle to address. Zero-trust security—a model that operates on the principle of "never trust, always verify"—offers a powerful framework for safeguarding AI systems and the valuable data they process.

Why Traditional Security Falls Short for AI Systems

Traditional perimeter-based security approaches are insufficient for modern AI deployments due to several key factors:

- Distributed Architecture: AI systems often span multiple environments (cloud, edge, on-premises) with data flowing across numerous boundaries.

- Complex Supply Chain: AI development involves multiple components—from training data to pre-trained models to deployment platforms—each introducing potential vulnerabilities.

- Unique Attack Vectors: AI systems face novel threats like data poisoning, model inversion attacks, and adversarial examples that bypass traditional security controls.

- Opaque Operations: The "black box" nature of many AI systems makes detecting malicious activity or unauthorized access significantly more challenging.

The consequences of security breaches in AI systems extend beyond data theft to include model corruption, biased outputs, and compromised decision-making—potentially affecting critical systems from healthcare diagnostics to financial risk assessment.

Core Principles of Zero-Trust for AI

A zero-trust approach to AI security revolves around five fundamental principles:

1. Verify Explicitly

Every access request to AI resources must be fully authenticated and authorized based on all available data points:

- Multi-factor Authentication: Implement MFA for all human access to AI development environments, training pipelines, and inference endpoints.

- Continuous Validation: Continuously verify the identity and security posture of systems interacting with AI components.

- Contextual Authorization: Consider context (time, location, device posture, request patterns) when granting access to AI assets.

Implementation Example: A financial services firm implemented certificate-based mutual TLS authentication for all services accessing its fraud detection AI system and required biometric verification plus security-key authentication for human operators. Together, these controls reduced unauthorized access attempts by 97%.
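To make contextual authorization more concrete, the following is a minimal sketch of a deny-by-default policy check. The `AccessRequest` fields, the allowed countries, the time window, and the rate threshold are all hypothetical values chosen for illustration; a real deployment would source these signals from its identity provider and device management tooling.

```python
from dataclasses import dataclass
from datetime import datetime, time
from typing import List, Tuple


@dataclass(frozen=True)
class AccessRequest:
    """Hypothetical request context; field names are illustrative only."""
    principal: str
    mfa_verified: bool
    device_compliant: bool
    country: str
    timestamp: datetime
    requests_last_minute: int


# Assumed policy values, not recommendations.
ALLOWED_COUNTRIES = {"US", "CA"}
ALLOWED_HOURS = (time(7, 0), time(19, 0))
MAX_REQUESTS_PER_MINUTE = 60


def authorize(request: AccessRequest) -> Tuple[bool, List[str]]:
    """Return (allowed, reasons). Any failed check denies the request."""
    reasons: List[str] = []
    if not request.mfa_verified:
        reasons.append("mfa_missing")
    if not request.device_compliant:
        reasons.append("device_noncompliant")
    if request.country not in ALLOWED_COUNTRIES:
        reasons.append("location_not_allowed")
    start, end = ALLOWED_HOURS
    if not (start <= request.timestamp.time() <= end):
        reasons.append("outside_allowed_hours")
    if request.requests_last_minute > MAX_REQUESTS_PER_MINUTE:
        reasons.append("unusual_request_rate")
    return (not reasons, reasons)
```

Returning the list of failed checks alongside the decision keeps every denial explainable in audit logs, which is useful when tuning the policy.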

2. Least Privilege Access

Limit access rights to the minimum necessary for each component and actor in the AI ecosystem:

- Role-Based Access Control: Define granular roles specific to AI workflows (data scientists, model validators, deployment engineers).

- Just-in-Time Access: Grant temporary elevated privileges only when needed for specific tasks.

- Data Minimization: Ensure AI systems and their operators can only access the specific data needed for their function.

Real-world Application: A healthcare AI vendor implemented least privilege access for its diagnostic AI platform, using time-bound, purpose-limited authorizations that expire automatically once a task is completed. This reduced the persistent access footprint by 76%.
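Time-bound, purpose-limited authorization can be sketched in a few lines. The `JitAccessBroker` class below is a hypothetical in-memory illustration, not a description of any vendor's product; a production system would persist grants, record approvals, and integrate with an identity provider.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta, timezone
from typing import Dict, Optional, Tuple


@dataclass(frozen=True)
class Grant:
    principal: str
    resource: str
    purpose: str
    expires_at: datetime


class JitAccessBroker:
    """Hypothetical in-memory broker for just-in-time grants."""

    def __init__(self) -> None:
        self._grants: Dict[Tuple[str, str], Grant] = {}

    def grant(self, principal: str, resource: str, purpose: str,
              ttl: timedelta, now: Optional[datetime] = None) -> Grant:
        """Issue a grant that expires ttl after the current time."""
        if now is None:
            now = datetime.now(timezone.utc)
        issued = Grant(principal, resource, purpose, now + ttl)
        self._grants[(principal, resource)] = issued
        return issued

    def is_allowed(self, principal: str, resource: str, purpose: str,
                   now: Optional[datetime] = None) -> bool:
        """Allow only an unexpired grant whose purpose matches exactly."""
        if now is None:
            now = datetime.now(timezone.utc)
        found = self._grants.get((principal, resource))
        if found is None:
            return False  # deny by default
        if now >= found.expires_at:
            del self._grants[(principal, resource)]  # drop expired grants
            return False
        return found.purpose == purpose
```

For example, a grant issued for "validation" with a 30-minute lifetime permits validation work during that window, rejects any other purpose, and stops working on its own once the window closes.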

3. Assume Breach

Design AI security with the assumption that breaches will occur:

- Segmentation: Segment AI environments to contain potential compromises and prevent lateral movement.

- Continuous Monitoring: Implement AI-specific monitoring to detect anomalous behavior in model usage, data access patterns, and output characteristics.

- Response Automation: Develop automated response capabilities to isolate potentially compromised AI components.

Industry Case Study: A retailer deployed its recommendation engine in a micro-segmented architecture with automated threat detection, reducing the average time to contain AI security incidents from 72 hours to 18 minutes.
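The combination of continuous monitoring and automated response can be illustrated with a small sketch. The `UsageMonitor` class, its thresholds, and the `on_isolate` callback are assumptions made for this example; it uses a simple rolling z-score on request volume, which is only one of many possible detection signals.

```python
from collections import deque
from statistics import mean, pstdev
from typing import Callable, Deque


class UsageMonitor:
    """Hypothetical monitor: isolates a component when usage deviates sharply."""

    def __init__(self, component: str, on_isolate: Callable[[str], None],
                 window: int = 20, min_samples: int = 5,
                 threshold: float = 3.0, min_spread: float = 1.0) -> None:
        self.component = component
        self.on_isolate = on_isolate      # e.g. a hook that revokes network access
        self.min_samples = min_samples
        self.threshold = threshold
        self.min_spread = min_spread      # floor so a flat baseline is not over-sensitive
        self.isolated = False
        self._history: Deque[float] = deque(maxlen=window)

    def observe(self, requests_per_minute: float) -> bool:
        """Record a sample; return True if this sample triggered isolation."""
        if self.isolated:
            return False
        if len(self._history) >= self.min_samples:
            baseline = mean(self._history)
            spread = max(pstdev(self._history), self.min_spread)
            if abs(requests_per_minute - baseline) / spread > self.threshold:
                self.isolated = True
                self.on_isolate(self.component)
                return True
        # Only normal samples extend the baseline.
        self._history.append(requests_per_minute)
        return False
```

Keeping anomalous samples out of the baseline is a deliberate choice here: it prevents an attacker from gradually shifting what the monitor considers normal with a single burst.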

4. Verify Data and Model Integrity

Ensure the integrity of both data and models throughout the AI lifecycle:

- Cryptographic Verification: Implement hash verification and digital signatures for training data, model weights, and deployment artifacts.

- Provenance Tracking: Maintain immutable records of data sources, transformations, and model development steps.

- Runtime Verification: Continuously validate that deployed models match their verified, approved versions.

Practical Implementation: An autonomous vehicle manufacturer implemented blockchain-based provenance tracking for all training data and model versions, creating an immutable audit trail that supports both its security requirements and its regulatory compliance obligations.
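Cryptographic verification of artifacts can be sketched with the Python standard library. The example below is a minimal illustration, assuming artifacts are available as bytes and that a shared secret key exists; it uses an HMAC as a stand-in for a digital signature, whereas a production pipeline would more likely use asymmetric signatures so that verifiers cannot forge manifests. The function names are hypothetical.

```python
import hashlib
import hmac
import json
from typing import Dict, List


def sha256_hex(data: bytes) -> str:
    return hashlib.sha256(data).hexdigest()


def _tag(digests: Dict[str, str], key: bytes) -> str:
    # Canonical JSON so the same digests always produce the same tag.
    payload = json.dumps(digests, sort_keys=True).encode("utf-8")
    return hmac.new(key, payload, hashlib.sha256).hexdigest()


def build_manifest(artifacts: Dict[str, bytes], key: bytes) -> dict:
    """Record a SHA-256 digest per artifact and authenticate the set."""
    digests = {name: sha256_hex(blob) for name, blob in sorted(artifacts.items())}
    return {"digests": digests, "tag": _tag(digests, key)}


def verify_artifacts(artifacts: Dict[str, bytes], manifest: dict,
                     key: bytes) -> List[str]:
    """Return a list of problems; an empty list means everything matches."""
    if not hmac.compare_digest(_tag(manifest["digests"], key), manifest["tag"]):
        return ["manifest_tag_invalid"]
    problems: List[str] = []
    for name, expected in manifest["digests"].items():
        blob = artifacts.get(name)
        if blob is None:
            problems.append("missing:" + name)
        elif not hmac.compare_digest(sha256_hex(blob), expected):
            problems.append("modified:" + name)
    return problems
```

Running the same `verify_artifacts` check at deployment time and periodically at runtime gives a simple way to confirm that a deployed model still matches its approved version.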

5. End-to-End Encryption

Protect data throughout its journey across the AI lifecycle:

- Data in Transit: Encrypt all communication between AI system components using strong protocols.

- Data at Rest: Implement encryption for training data, model parameters, and inference results in storage.

- Secure Enclaves: Consider confidential computing technologies (hardware-based trusted execution environments) to protect data while it is being processed.

Security Enhancement: A financial AI system processing sensitive customer transaction data combined end-to-end encryption with confidential computing enclaves, so that data stays protected from the host environment and other workloads even during model training and inference.

Implementing Zero-Trust Architecture for AI Systems

A comprehensive zero-trust architecture for AI systems encompasses multiple layers:

Identity Layer

Strong identity verification for all entities in the AI ecosystem:

- Human Identity: Multi-factor authentication for data scientists, engineers, and operators.

- Service Identity: Certificate-based authentication for AI services and components.

- Workload Identity: Cryptographic identities for containerized AI workloads.

Device Layer

Ensure security of all devices interacting with AI systems:

- Endpoint Protection: Advanced security for development workstations and servers hosting AI workloads.

- Edge Device Security: Secure boot, attestation, and tamper protection for edge AI devices.

- IoT Security: Authentication and encryption for IoT devices feeding data to AI systems.

Network Layer

Secure all network communications between AI components:

- Micro-segmentation: Fine-grained network controls isolating AI components from each other.

- API Security: API gateways with strong authentication for all AI service interfaces.

- Traffic Encryption: TLS 1.3 with strong cipher suites for all AI-related communications.
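As one illustration of the traffic encryption and service authentication controls above, the sketch below configures a server-side TLS context with Python's standard `ssl` module that refuses anything older than TLS 1.3 and requires a client certificate (mutual TLS). The function name and file-path parameters are hypothetical; certificate issuance and rotation are out of scope.

```python
import ssl
from typing import Optional


def build_mtls_server_context(certfile: Optional[str] = None,
                              keyfile: Optional[str] = None,
                              client_ca_file: Optional[str] = None) -> ssl.SSLContext:
    """Build a server context that enforces TLS 1.3 and client certificates."""
    # Purpose.CLIENT_AUTH yields a context for the server side of a connection.
    ctx = ssl.create_default_context(ssl.Purpose.CLIENT_AUTH)
    ctx.minimum_version = ssl.TLSVersion.TLSv1_3
    # Reject clients that do not present a certificate we can verify.
    ctx.verify_mode = ssl.CERT_REQUIRED
    if client_ca_file is not None:
        # Trust only the CA that issues certificates to internal AI services.
        ctx.load_verify_locations(cafile=client_ca_file)
    if certfile is not None:
        ctx.load_cert_chain(certfile=certfile, keyfile=keyfile)
    return ctx
```

The resulting context can be passed to any server that accepts an `ssl.SSLContext`. Restricting trust to a dedicated internal CA, rather than the system trust store, keeps publicly issued certificates from being accepted as service identities.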

Data Layer

Protect AI-related data throughout its lifecycle:

- Classification: Automated data classification to identify sensitive information processed by AI.

- Access Controls: Attribute-based access control for fine-grained permissions to AI data.

- Encryption: Field-level encryption for sensitive data elements used in AI processing.

Application Layer

Secure AI applications and models:

- Secure Development: AI-specific secure coding practices and static analysis.

- Container Security: Hardened containers with minimal attack surface for AI workloads.

- Model Protection: Watermarking and tamper detection for deployed models.

Addressing the Unique Challenges of AI Security

Zero-trust implementation for AI must address several challenges unique to artificial intelligence:

Adversarial Attacks

AI systems are vulnerable to inputs specifically crafted to manipulate their outputs:

- Input Validation: Implement robust validation and sanitization for all inputs to AI systems.

- Adversarial Training: Improve model robustness by including adversarial examples in the training data.

- Runtime Detection: Deploy monitoring systems to identify potential adversarial inputs.

Defense Strategy: A facial recognition system implemented multi-layered defenses against adversarial attacks, combining input preprocessing, model ensemble techniques, and anomaly detection to reduce vulnerability to manipulation attempts by 94%.
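Input validation is the simplest of these layers to illustrate. The sketch below assumes a model that takes a fixed-length numeric feature vector with a known valid range; the function and exception names are hypothetical. It rejects malformed inputs before they reach the model, but it does not by itself stop adversarial examples crafted within the valid range, which is why the other layers remain necessary.

```python
import math
from typing import List, Sequence


class InvalidInput(ValueError):
    """Raised when an inference input fails validation."""


def validate_features(features: Sequence[object], expected_len: int,
                      low: float, high: float) -> List[float]:
    """Check length, type, finiteness, and range; return floats on success."""
    if len(features) != expected_len:
        raise InvalidInput(
            "expected %d features, got %d" % (expected_len, len(features)))
    cleaned: List[float] = []
    for index, value in enumerate(features):
        # bool is a subclass of int, so exclude it explicitly.
        if isinstance(value, bool) or not isinstance(value, (int, float)):
            raise InvalidInput("feature %d is not numeric" % index)
        if not math.isfinite(value):
            raise InvalidInput("feature %d is not finite" % index)
        if not (low <= value <= high):
            raise InvalidInput("feature %d is out of range" % index)
        cleaned.append(float(value))
    return cleaned
```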

Model Stealing and Extraction

Protect intellectual property in AI models:

- Rate Limiting: Implement strict API rate limits to slow systematic probing and make it more expensive.

- Output Perturbation: Add controlled noise to model outputs to make reverse engineering harder.

- Confidence Masking: Limit exposure of confidence scores and internal model states.
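Two of these controls, rate limiting and confidence masking, can be sketched briefly. The `TokenBucket` class and `mask_confidences` function below are hypothetical illustrations with assumed parameters; a real inference API would typically enforce limits per client at the gateway.

```python
import time
from typing import Dict, Optional


class TokenBucket:
    """Token-bucket limiter: each request spends one token; tokens refill over time."""

    def __init__(self, capacity: int, refill_per_second: float,
                 now: Optional[float] = None) -> None:
        self.capacity = capacity
        self.refill_per_second = refill_per_second
        self._tokens = float(capacity)
        self._last = time.monotonic() if now is None else now

    def allow(self, now: Optional[float] = None) -> bool:
        """Return True if the request may proceed, False if it is throttled."""
        now = time.monotonic() if now is None else now
        elapsed = max(0.0, now - self._last)
        self._tokens = min(float(self.capacity),
                           self._tokens + elapsed * self.refill_per_second)
        self._last = now
        if self._tokens >= 1.0:
            self._tokens -= 1.0
            return True
        return False


def mask_confidences(probabilities: Dict[str, float], decimals: int = 1) -> dict:
    """Expose only the top label and a coarsely rounded score."""
    label = max(probabilities, key=probabilities.get)
    return {"label": label, "confidence": round(probabilities[label], decimals)}
```

Returning only the top label with a rounded score gives legitimate callers what they need while withholding the fine-grained probability vector that extraction attacks tend to rely on.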

Training Data Poisoning

Prevent malicious manipulation of training data:

- Data Provenance: Verify and record the source and chain of custody for all training data.

- Anomaly Detection: Implement statistical methods to identify suspicious data patterns.

- Robust Statistics: Use training techniques resistant to outliers and poisoning attempts.
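As a small illustration of statistical anomaly detection on training data, the sketch below flags outliers in a single numeric feature using the median absolute deviation (MAD), which is less affected by extreme values than the mean and standard deviation. The function name is hypothetical, and the 3.5 cutoff on the modified z-score is a common rule of thumb rather than a tuned value; targeted poisoning can evade a univariate check like this, so it complements provenance controls rather than replacing them.

```python
from statistics import median
from typing import List, Sequence


def mad_outlier_indices(values: Sequence[float],
                        threshold: float = 3.5) -> List[int]:
    """Return indices whose modified z-score exceeds the threshold."""
    center = median(values)
    deviations = [abs(v - center) for v in values]
    mad = median(deviations)
    if mad == 0:
        # More than half the values are identical; this method cannot
        # score deviations, so defer to other checks.
        return []
    # 0.6745 scales the MAD to be comparable to a standard deviation
    # for normally distributed data.
    return [i for i, d in enumerate(deviations)
            if 0.6745 * d / mad > threshold]
```

Flagged records would normally be routed to review rather than silently dropped, since legitimate rare cases can also look like outliers.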

Case Study: Zero-Trust AI in Critical Infrastructure

A major energy utility applied zero-trust principles to its AI-powered grid management system:

Challenge:

The utility relied on AI for optimizing power distribution, predicting maintenance needs, and detecting anomalies. The critical nature of this infrastructure made it a high-value target for sophisticated threat actors.

Zero-Trust Implementation:

- Micro-segmentation of the AI environment with strict controls between training, validation, and production segments

- Just-in-time privileged access management for all AI system interactions

- Confidential computing for sensitive data processing during model training and inference

- Continuous monitoring with AI-specific behavioral analytics to detect anomalous patterns

- Secure CI/CD pipeline with cryptographic verification at each stage

Results:

- 89% reduction in the attack surface available to potential attackers

- Zero successful breaches over 18 months of operation

- Mean time to detect potential threats reduced from days to minutes

- Compliance with new industry regulations achieved 6 months ahead of deadline

Getting Started with Zero-Trust for AI

Organizations can begin implementing zero-trust security for AI systems through a phased approach:

Phase 1: Assessment and Visibility

- Inventory all AI assets, data flows, and access patterns

- Identify high-value AI assets and their specific security requirements

- Map the current state of security controls across the AI lifecycle

Phase 2: Initial Implementation

- Implement strong identity verification for all AI system access

- Deploy micro-segmentation for critical AI environments

- Establish baseline monitoring for AI components

Phase 3: Comprehensive Protection

- Extend zero-trust controls across all AI systems and environments

- Implement AI-specific threat detection and response capabilities

- Automate security operations and compliance verification

Conclusion: Security as an Enabler for AI Innovation

Zero-trust security is not merely a defensive measure for AI systems—it's an enabler of innovation. By establishing robust security controls and continuous verification, organizations can:

- Deploy AI in more sensitive domains with confidence

- Accelerate AI adoption by addressing security and compliance requirements upfront

- Build stakeholder trust in AI-driven processes and decisions

- Create a foundation for responsible, secure AI that delivers value while managing risk

As AI becomes more deeply embedded in critical business functions and infrastructure, zero-trust security provides the framework needed to ensure these powerful systems remain secure, trustworthy, and resilient against evolving threats.

Key Takeaways

- Traditional security models are inadequate for protecting modern AI systems and the sensitive data they process

- Zero-trust principles—verify explicitly, least privilege, assume breach, verify integrity, and encrypt everywhere—provide a robust framework for AI security

- Implementation requires a multi-layered approach spanning identity, devices, networks, data, and applications

- Organizations that successfully implement zero-trust for AI can innovate faster with greater confidence and stakeholder trust

