Building Scalable ML Pipelines: Best Practices for 2025

Machine learning has evolved from experimental projects to critical production systems that drive business value across industries. However, the path from developing ML models to deploying and maintaining them at scale remains challenging. In this article, we'll explore the latest best practices for building scalable, robust, and efficient machine learning pipelines that can withstand the demands of enterprise environments.

Understanding the ML Lifecycle

Modern ML pipelines extend far beyond just model training. A comprehensive ML pipeline encompasses multiple stages:

- Data Ingestion & Validation: Collecting, validating, and preparing data for processing

- Data Preprocessing: Cleaning, transforming, and feature engineering

- Model Development: Training, testing, and validating ML models

- Model Deployment: Packaging, serving, and integrating models

- Monitoring & Maintenance: Tracking performance, detecting drift, and retraining

Each stage presents unique challenges that must be addressed to create a truly scalable system.

Key Principles for Scalable ML Pipelines

1. Automation is Non-Negotiable

Manual processes are the enemy of scale. According to a recent McKinsey study, data scientists spend up to 70% of their time on data preparation tasks that could be automated. Modern ML pipelines should automate:

- Data collection and preprocessing

- Feature engineering and selection

- Model training and evaluation

- Testing and validation

- Deployment and monitoring

Implementation Tip: Use orchestration tools like Apache Airflow, Kubeflow, or Dagster to create reproducible, scheduled workflows that minimize human intervention.
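Airflow, Kubeflow, and Dagster all model a pipeline as a directed acyclic graph (DAG) of tasks that run in dependency order. The sketch below does not use any of their APIs. It relies only on Python's standard-library `graphlib` (Python 3.9+) to show that core idea. The task names and the toy "model" (a simple mean) are hypothetical stand-ins for real pipeline steps.

```python
from graphlib import TopologicalSorter
from typing import Callable

# Hypothetical pipeline steps; each reads from and writes to a shared context dict.
def ingest(ctx: dict) -> None:
    ctx["raw"] = [3.0, None, 5.0, 4.0]

def preprocess(ctx: dict) -> None:
    # Dropping missing values stands in for real cleaning and feature engineering.
    ctx["clean"] = [x for x in ctx["raw"] if x is not None]

def train(ctx: dict) -> None:
    # Toy "model": the mean of the cleaned values.
    ctx["model"] = sum(ctx["clean"]) / len(ctx["clean"])

def evaluate(ctx: dict) -> None:
    # Mean absolute error of the toy model on the same data.
    ctx["mae"] = sum(abs(x - ctx["model"]) for x in ctx["clean"]) / len(ctx["clean"])

TASKS: dict[str, Callable[[dict], None]] = {
    "ingest": ingest,
    "preprocess": preprocess,
    "train": train,
    "evaluate": evaluate,
}

# Maps each task to the set of tasks that must finish before it starts.
DEPENDENCIES = {
    "preprocess": {"ingest"},
    "train": {"preprocess"},
    "evaluate": {"train"},
}

def run_pipeline() -> tuple[list[str], dict]:
    """Run every task once, in an order that respects DEPENDENCIES."""
    ctx: dict = {}
    order = list(TopologicalSorter(DEPENDENCIES).static_order())
    for name in order:
        TASKS[name](ctx)
    return order, ctx
```

Calling `run_pipeline()` executes `ingest`, `preprocess`, `train`, and `evaluate` in that order. A real orchestrator adds the parts this sketch leaves out: scheduling, retries, parallel execution of independent tasks, and a persisted run history.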

2. Embrace Containerization and Microservices

Containerization has revolutionized ML deployment by providing consistent environments across development and production stages:

Benefits:

- Environment consistency

- Scalable resource allocation

- Isolated dependencies

- Simplified deployment across cloud providers

Implementation Tip: Use Docker for containerizing ML components and Kubernetes for orchestration. Structure your ML pipeline as a series of microservices, each handling specific tasks like feature extraction, model training, or inference.

For example, a Docker Compose setup for a modular ML pipeline might define separate services for data processing, model training, and an inference API, each with its own resource limits and dependencies.
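The sketch below expresses such a setup as a Python dictionary that follows the Compose file structure and prints it as JSON. Because YAML 1.2 is a superset of JSON, Compose should accept the printed output saved as a file (for example `compose.json`) and passed with `docker compose -f`. You can also transcribe it to YAML by hand. The service names, build paths, port, and resource limits are all hypothetical.

```python
import json

def build_compose_spec() -> dict:
    """Hypothetical three-service ML pipeline in Compose-file structure."""
    def limits(cpus: str, memory: str) -> dict:
        # Per-service resource caps under the Compose `deploy.resources.limits` key.
        return {"resources": {"limits": {"cpus": cpus, "memory": memory}}}

    return {
        "services": {
            "data-processing": {
                "build": "./services/data_processing",
                "volumes": ["./data:/data"],
                "deploy": limits("2", "4g"),
            },
            "training": {
                "build": "./services/training",
                "depends_on": ["data-processing"],
                "volumes": ["./data:/data:ro", "./models:/models"],
                "deploy": limits("8", "16g"),
            },
            "inference-api": {
                "build": "./services/inference",
                "depends_on": ["training"],
                "ports": ["8080:8080"],
                "volumes": ["./models:/models:ro"],
                "deploy": limits("1", "2g"),
            },
        }
    }

if __name__ == "__main__":
    print(json.dumps(build_compose_spec(), indent=2))
```

Note that `depends_on` only orders container startup. It does not wait for an upstream service to finish its work, so batch steps such as training usually still need an orchestrator or an explicit completion condition.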

3. Implement Robust Data Versioning

Data changes over time, and models trained on different data versions may produce different results. A scalable ML pipeline requires:

- Data versioning for input datasets

- Version control for feature engineering code

- Lineage tracking to connect models with their training data

Implementation Tip: Tools like DVC (Data Version Control), Pachyderm, or MLflow can help manage data versions. Always maintain metadata about which data versions were used to train specific model versions.
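Dedicated tools handle this at scale, but the underlying idea is simple: identify each dataset version by a content hash, then store that hash alongside the model version. The sketch below uses only the standard library. The `LineageRecord` fields (including `code_commit` for the feature-engineering code's revision) and the JSON-file layout are hypothetical. They are not the format that DVC, Pachyderm, or MLflow uses.

```python
import hashlib
import json
import tempfile
from dataclasses import asdict, dataclass
from pathlib import Path

def dataset_fingerprint(path: Path, chunk_size: int = 1 << 20) -> str:
    """SHA-256 of the file's bytes, read in chunks; serves as the data version ID."""
    digest = hashlib.sha256()
    with path.open("rb") as f:
        for chunk in iter(lambda: f.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()

@dataclass(frozen=True)
class LineageRecord:
    model_version: str
    dataset_path: str
    dataset_sha256: str
    code_commit: str  # hypothetical: revision of the feature-engineering code

def write_lineage(record: LineageRecord, out_dir: Path) -> Path:
    """Persist one model-to-data link as <model_version>.lineage.json."""
    out_dir.mkdir(parents=True, exist_ok=True)
    out = out_dir / f"{record.model_version}.lineage.json"
    out.write_text(json.dumps(asdict(record), indent=2))
    return out

if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as tmp:
        data = Path(tmp) / "train.csv"
        data.write_text("x,y\n1,2\n")
        rec = LineageRecord("churn-model-v3", str(data), dataset_fingerprint(data), "abc1234")
        print(write_lineage(rec, Path(tmp) / "lineage").read_text())
```

Because the hash changes whenever a single byte of the dataset changes, two models with the same `dataset_sha256` are known to have been trained on identical data.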

4. Prioritize Model Versioning and Registry

As your ML operations scale, you'll likely have multiple models in various stages of development and deployment. A model registry is essential for:

- Tracking model versions

- Storing model metadata

- Managing model lifecycle

- Facilitating A/B testing and rollbacks

Implementation Tip: Use MLflow, Weights & Biases, or cloud-specific solutions like AWS SageMaker Model Registry to organize your models. Each model should be tagged with performance metrics, training parameters, and dataset versions.
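To make those responsibilities concrete, here is a toy in-memory registry. It is not the API of MLflow, Weights & Biases, or SageMaker. The class names, the `staging`/`production`/`archived` stages, and the rollback rule (restore the newest archived version older than the current production one) are assumptions chosen for illustration.

```python
from dataclasses import dataclass, field
from typing import Optional

@dataclass
class ModelVersion:
    version: int
    artifact_uri: str
    metrics: dict
    params: dict
    dataset_version: str
    stage: str = "staging"  # "staging", "production", or "archived"

@dataclass
class ModelRegistry:
    """Toy in-memory registry; a real one persists this state in a database."""
    models: dict = field(default_factory=dict)  # name -> list[ModelVersion]

    def register(self, name: str, artifact_uri: str, metrics: dict,
                 params: dict, dataset_version: str) -> ModelVersion:
        versions = self.models.setdefault(name, [])
        mv = ModelVersion(len(versions) + 1, artifact_uri, metrics, params, dataset_version)
        versions.append(mv)
        return mv

    def get(self, name: str, version: int) -> ModelVersion:
        return self.models[name][version - 1]

    def production(self, name: str) -> Optional[ModelVersion]:
        return next((mv for mv in self.models.get(name, []) if mv.stage == "production"), None)

    def promote(self, name: str, version: int) -> None:
        # Keep at most one production version: archive the current one first.
        current = self.production(name)
        if current is not None:
            current.stage = "archived"
        self.get(name, version).stage = "production"

    def rollback(self, name: str) -> ModelVersion:
        """Restore the newest archived version older than the current production one."""
        current = self.production(name)
        if current is None:
            raise LookupError(f"{name!r} has no production version")
        older = [mv for mv in self.models[name]
                 if mv.stage == "archived" and mv.version < current.version]
        if not older:
            raise LookupError(f"no earlier version of {name!r} to roll back to")
        self.promote(name, older[-1].version)
        return older[-1]
```

Because every version carries its metrics, parameters, and dataset version, a rollback restores not just the weights but the full record of how that model was produced.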

5. Adopt Feature Stores for Production

Feature stores have emerged as a critical component of scalable ML pipelines, providing:

- Centralized feature computation and storage

- Consistency between training and serving

- Reusability of features across models

- Reduced feature engineering overhead

Implementation Tip: Consider using Feast, Tecton, or Amazon SageMaker Feature Store to implement a feature store. Design your feature pipeline to compute features once and reuse them across multiple models.

A feature store implementation might include entity definitions, feature views with appropriate time-to-live settings, and connections to source data systems.
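Feast, for instance, expresses these ideas as `Entity` and `FeatureView` definitions with a `ttl`. The plain-Python sketch below does not use Feast. It only illustrates the lookup semantics behind training/serving consistency: one point-in-time function returns the latest feature value at or before a given timestamp, and it treats values older than the TTL as missing. The feature name and entity IDs are hypothetical.

```python
import bisect
from dataclasses import dataclass, field
from datetime import datetime, timedelta
from typing import Optional

@dataclass
class FeatureView:
    """Toy feature view: timestamped values per entity, expiring after `ttl`."""
    name: str
    ttl: timedelta
    # entity_id -> list of (event_time, value), kept sorted by event_time.
    _rows: dict = field(default_factory=dict, init=False, repr=False)

    def ingest(self, entity_id: str, event_time: datetime, value: float) -> None:
        bisect.insort(self._rows.setdefault(entity_id, []), (event_time, value))

    def get(self, entity_id: str, as_of: datetime) -> Optional[float]:
        """Latest value at or before `as_of`; None if absent or older than `ttl`."""
        rows = self._rows.get(entity_id, [])
        # (as_of, inf) sorts after every row whose event_time <= as_of.
        idx = bisect.bisect_right(rows, (as_of, float("inf"))) - 1
        if idx < 0:
            return None
        event_time, value = rows[idx]
        return value if as_of - event_time <= self.ttl else None
```

For training, `as_of` would be each label's event time, so no future values leak into the training set. For online serving, it would be the current time, and the same function answers both.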

Architecture Patterns for Scale

The Inner-Middle-Outer Loop Architecture

A modern approach to ML pipelines involves organizing workflows into three distinct loops:

1. Inner Loop: Experimentation

- Focus: Rapid iteration and exploration

- Tools: Jupyter Notebooks, quick experimentation frameworks

- Users: Data scientists and ML researchers

- Goals: Feature engineering, model selection, hyperparameter tuning

2. Middle Loop: Validation and Testing

- Focus: Rigorous validation of promising models

- Tools: CI/CD pipelines, automated testing frameworks

- Users: ML engineers and DevOps teams

- Goals: Performance benchmarking, bias testing, security validation

3. Outer Loop: Production and Monitoring

- Focus: Deployment, monitoring, and retraining

- Tools: Monitoring platforms, feature stores, model registries

- Users: Operations teams, business stakeholders

- Goals: Model serving, performance monitoring, drift detection

This architecture enables different teams to work concurrently while maintaining a clear path from experimentation to production.
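As one example of a middle-loop check, a CI job can run an automated gate that blocks promotion unless a candidate meets fixed quality and latency bars. The sketch below is hypothetical. The thresholds, the accuracy metric, and the 95th-percentile latency are illustrative choices, and bias and security checks would be additional gates.

```python
import statistics
from dataclasses import dataclass

@dataclass(frozen=True)
class GateThresholds:
    min_accuracy: float = 0.90         # hypothetical quality bar
    max_p95_latency_ms: float = 50.0   # hypothetical latency budget

def p95(samples: list[float]) -> float:
    # With n=20, statistics.quantiles returns 19 cut points; the last is the 95th percentile.
    return statistics.quantiles(samples, n=20)[-1]

def validation_gate(y_true: list, y_pred: list, latencies_ms: list[float],
                    t: GateThresholds = GateThresholds()) -> list[str]:
    """Return failure reasons; an empty list means the candidate may leave the middle loop."""
    failures = []
    accuracy = sum(a == b for a, b in zip(y_true, y_pred)) / len(y_true)
    if accuracy < t.min_accuracy:
        failures.append(f"accuracy {accuracy:.3f} < {t.min_accuracy}")
    latency = p95(latencies_ms)
    if latency > t.max_p95_latency_ms:
        failures.append(f"p95 latency {latency:.1f} ms > {t.max_p95_latency_ms} ms")
    return failures
```

In CI, a non-empty return value would fail the job, which keeps the candidate in the middle loop until it is fixed.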

Implementing Hybrid Cloud Pipelines

Modern ML pipelines often span multiple environments:

- On-premises for sensitive data processing

- Public cloud for scalable training

- Edge devices for local inference

Implementation Tip: Use Kubernetes for consistent deployments across environments, and adopt tools like Kubeflow or MLflow that support hybrid-cloud scenarios. Define clear interfaces between components to enable portability.
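One way to define such an interface in Python is a `typing.Protocol` that pipeline steps depend on, with one implementation per environment. In this sketch, `ArtifactStore`, both implementations, and `publish_model` are hypothetical. A real deployment would add, for instance, an implementation backed by a cloud object-store client.

```python
import tempfile
from pathlib import Path
from typing import Protocol

class ArtifactStore(Protocol):
    """The only storage interface pipeline steps are allowed to depend on."""
    def put(self, key: str, data: bytes) -> None: ...
    def get(self, key: str) -> bytes: ...

class LocalArtifactStore:
    """Filesystem-backed store, e.g. for an on-premises cluster."""
    def __init__(self, root: Path) -> None:
        self.root = root

    def put(self, key: str, data: bytes) -> None:
        path = self.root / key
        path.parent.mkdir(parents=True, exist_ok=True)
        path.write_bytes(data)

    def get(self, key: str) -> bytes:
        return (self.root / key).read_bytes()

class InMemoryArtifactStore:
    """Test double standing in for a remote object store."""
    def __init__(self) -> None:
        self._blobs: dict[str, bytes] = {}

    def put(self, key: str, data: bytes) -> None:
        self._blobs[key] = data

    def get(self, key: str) -> bytes:
        return self._blobs[key]

def publish_model(store: ArtifactStore, model_name: str, weights: bytes) -> str:
    """A pipeline step that runs unchanged against any ArtifactStore implementation."""
    key = f"models/{model_name}/weights.bin"
    store.put(key, weights)
    return key

if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as tmp:
        for store in (LocalArtifactStore(Path(tmp)), InMemoryArtifactStore()):
            key = publish_model(store, "recsys", b"\x00\x01")
            print(type(store).__name__, store.get(key))
```

Because `publish_model` only knows the protocol, moving a step between on-premises and cloud environments means swapping the store object, not rewriting the step.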

Monitoring and Maintenance at Scale

Real-time Performance Monitoring

As ML systems scale, monitoring becomes increasingly critical. Key metrics to track include:

- Model Performance: Accuracy, precision, recall

- System Performance: Latency, throughput, resource utilization

- Data Quality: Distribution shifts, missing values, anomalies

Implementation Tip: Implement dashboards that visualize these metrics over time, with alerting for significant deviations. Tools like Prometheus, Grafana, or specialized ML monitoring solutions like Arize AI or Evidently AI can help.
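For the data-quality row, one widely used drift score is the Population Stability Index (PSI). It compares the binned distribution of a feature in production against a training-time baseline by summing (a_i - e_i) * ln(a_i / e_i) over bins, where e_i and a_i are the baseline and current fractions of samples in bin i. In the sketch below, several values are assumptions to tune per feature: the bin edges, the small epsilon that avoids log(0), and the 0.25 alert threshold (a common rule of thumb, not a universal standard).

```python
import math
from bisect import bisect_right

def psi(baseline: list[float], current: list[float], edges: list[float],
        eps: float = 1e-6) -> float:
    """Population Stability Index of `current` against `baseline` over shared bins."""
    def proportions(sample: list[float]) -> list[float]:
        # len(edges) + 1 bins: (-inf, e0), [e0, e1), ..., [e_last, inf).
        counts = [0] * (len(edges) + 1)
        for x in sample:
            counts[bisect_right(edges, x)] += 1
        # Clamp empty bins to eps so the log term stays finite.
        return [max(c / len(sample), eps) for c in counts]

    expected, actual = proportions(baseline), proportions(current)
    return sum((a - e) * math.log(a / e) for e, a in zip(expected, actual))

def drift_alert(baseline: list[float], current: list[float], edges: list[float],
                threshold: float = 0.25) -> bool:
    """True when PSI exceeds the (hypothetical) alerting threshold."""
    return psi(baseline, current, edges) > threshold
```

A scheduled job could compute this per feature over a sliding window and export the value to the same dashboards as latency and throughput, so drift alerts follow the same paging rules as system alerts.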

Automated Retraining Workflows

Model performance typically degrades over time as production data drifts away from the data the model was trained on. A scalable pipeline should include:

- Automated detection of model drift

- Scheduled model evaluation

- Triggered retraining when performance drops

- Seamless model updates with validation

Implementation Tip: Implement champion-challenger patterns where new models are thoroughly validated against existing ones before deployment.
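Here is a minimal sketch of that pattern, together with a simple performance-drop trigger for retraining. The accuracy metric, the `min_gain` margin, and the `max_drop` tolerance are hypothetical. In practice you would score both models on the same recent, labeled holdout, using the metrics that matter for your use case.

```python
from dataclasses import dataclass
from typing import Callable, Sequence

@dataclass(frozen=True)
class Decision:
    promote: bool
    champion_score: float
    challenger_score: float

def accuracy(y_true: Sequence, y_pred: Sequence) -> float:
    return sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)

def champion_challenger(champion: Callable, challenger: Callable,
                        X: Sequence, y: Sequence, min_gain: float = 0.01) -> Decision:
    """Score both models on the same holdout; promote only on a clear improvement."""
    champ = accuracy(y, [champion(x) for x in X])
    chall = accuracy(y, [challenger(x) for x in X])
    return Decision(chall >= champ + min_gain, champ, chall)

def should_retrain(recent_score: float, reference_score: float,
                   max_drop: float = 0.05) -> bool:
    """Trigger retraining when live performance falls too far below the reference."""
    return reference_score - recent_score > max_drop
```

Together, `should_retrain` decides when to train a new challenger and `champion_challenger` decides whether that challenger replaces the model in production. The `min_gain` margin keeps noise-level differences from causing churn.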

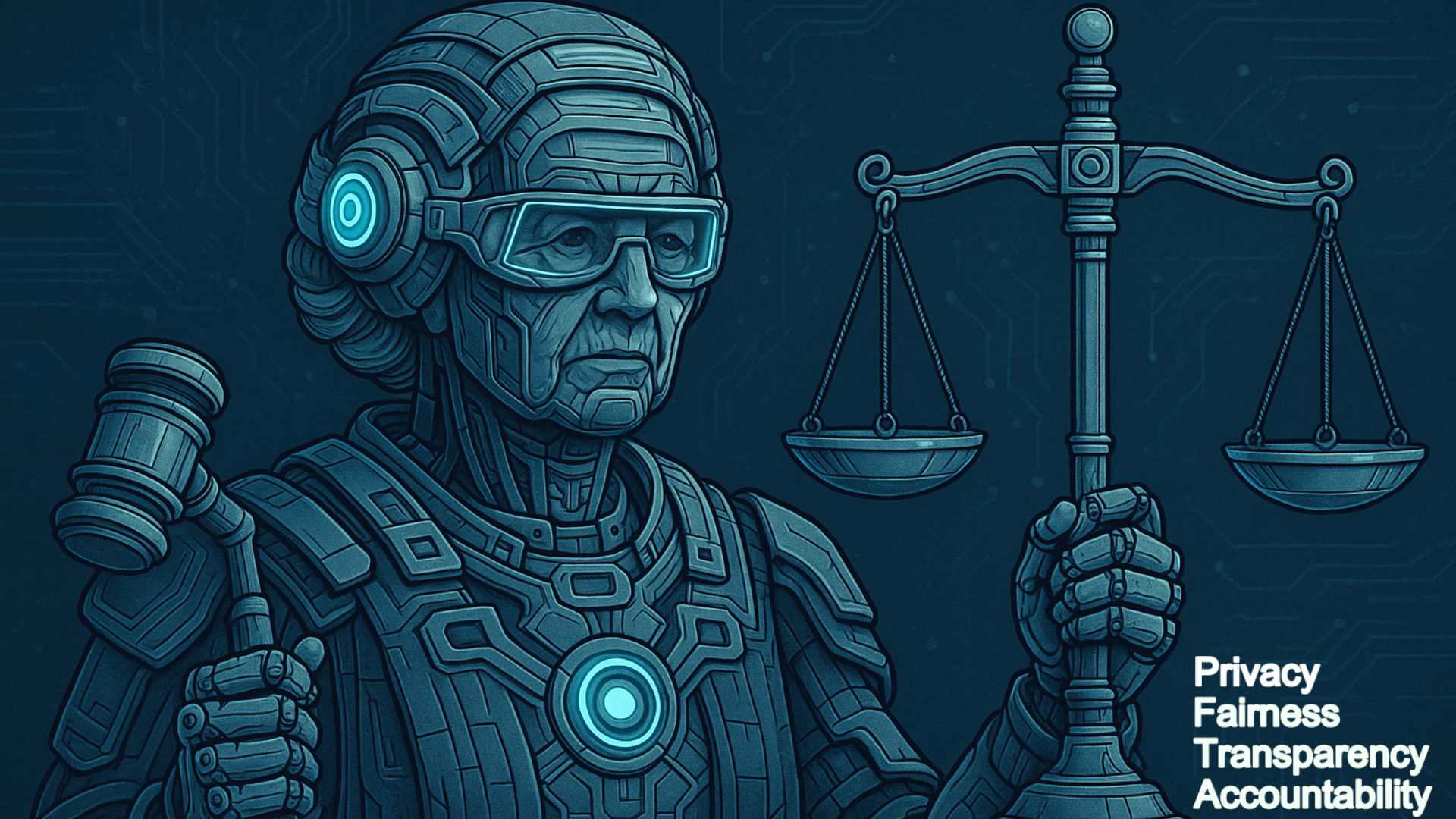

Security and Governance for ML at Scale

As ML pipelines handle increasingly sensitive data and make high-impact decisions, security and governance become paramount:

- Access Control: Implement fine-grained permissions for data and models

- Audit Trails: Log all access to data and model artifacts

- Compliance Checks: Validate models against regulatory requirements

- Ethical Monitoring: Check for bias and fairness issues

Implementation Tip: Integrate governance tools directly into your pipeline rather than treating them as separate processes. Tools like Great Expectations for data validation and Fairlearn for bias detection can be included in automated workflows.
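The sketch below hand-writes one data-quality check and one fairness metric to show how both can run as a single automated gate. It deliberately avoids the libraries' APIs. In a real pipeline you would use Great Expectations' own expectations and, for fairness, Fairlearn's `demographic_parity_difference` metric, which measures the same gap in selection rates between groups. The `age` column, its valid range, and the 0.1 tolerance are hypothetical.

```python
from collections import defaultdict

def validate_rows(rows: list[dict]) -> list[str]:
    """Hypothetical data expectations: `age` must be present and within [18, 120]."""
    errors = []
    for i, row in enumerate(rows):
        if row.get("age") is None:
            errors.append(f"row {i}: age is missing")
        elif not 18 <= row["age"] <= 120:
            errors.append(f"row {i}: age {row['age']} outside [18, 120]")
    return errors

def demographic_parity_difference(y_pred: list[int], groups: list[str]) -> float:
    """Largest gap in positive-prediction rate between any two groups."""
    positives: dict = defaultdict(int)
    totals: dict = defaultdict(int)
    for pred, group in zip(y_pred, groups):
        totals[group] += 1
        positives[group] += int(pred == 1)
    rates = [positives[g] / totals[g] for g in totals]
    return max(rates) - min(rates)

def governance_checks(rows: list[dict], y_pred: list[int], groups: list[str],
                      max_dp_gap: float = 0.1) -> list[str]:
    """Combine data and fairness checks; an empty list means the gate passes."""
    problems = validate_rows(rows)
    gap = demographic_parity_difference(y_pred, groups)
    if gap > max_dp_gap:
        problems.append(f"demographic parity difference {gap:.2f} > {max_dp_gap}")
    return problems
```

Running this as a pipeline step, rather than as a separate review, means a model that fails a governance check never reaches the deployment stage.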

Case Study: Scaling an E-commerce Recommendation System

A major e-commerce platform successfully scaled its recommendation system using these principles:

- Initial Challenge: Recommendations took hours to update and couldn't handle seasonal traffic spikes.

- Solution Implementation:

  - Migrated from monolithic architecture to microservices

  - Implemented a feature store for real-time feature computation

  - Created automated model deployment with canary testing

  - Built scalable inference servers with auto-scaling

- Results:

  - Reduced recommendation update time from hours to minutes

  - Handled 5x traffic spikes during holiday seasons without degradation

  - Increased recommendation relevance by 35% through faster iteration

  - Decreased infrastructure costs by 40% through better resource utilization

Future Trends in ML Pipeline Scalability

Looking ahead to the next few years, several trends will shape ML pipeline development:

1. Low-Code/No-Code ML Pipelines

Platforms that enable non-specialists to build and deploy ML models will become more sophisticated, with guardrails for production deployment.

2. Federated Learning Infrastructure

As privacy concerns increase, federated learning pipelines that train models across distributed data without centralization will become standard in sensitive domains.
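A common federated algorithm, Federated Averaging (FedAvg), has a core that fits in a few lines. Each client trains on its own data and sends back only model parameters, and the server averages those parameters, weighted by each client's sample count. In this toy sketch the "model" is a single parameter (the local mean) and local training is one closed-form step. Real FedAvg runs several epochs of local SGD and is often combined with secure aggregation.

```python
def federated_average(client_params: list[list[float]], client_sizes: list[int]) -> list[float]:
    """FedAvg aggregation: average each parameter, weighted by client sample count."""
    total = sum(client_sizes)
    return [
        sum(params[j] * n for params, n in zip(client_params, client_sizes)) / total
        for j in range(len(client_params[0]))
    ]

def local_update(local_data: list[float]) -> list[float]:
    # Toy local "training": the model is one parameter, the mean of the client's data.
    return [sum(local_data) / len(local_data)]

def federated_round(client_datasets: list[list[float]]) -> list[float]:
    """One round: clients train locally and share only parameters, never raw data."""
    updates = [local_update(data) for data in client_datasets]
    sizes = [len(data) for data in client_datasets]
    return federated_average(updates, sizes)
```

Suppose one client holds `[1.0, 2.0, 3.0]` and another holds `[10.0]`. The weighted average of their local means is 4.0, the same as the mean over all four values, even though the server never sees the raw data.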

3. Specialized ML Hardware Support

Pipelines will increasingly target domain-specific hardware accelerators beyond GPUs, optimizing for performance and energy efficiency.

4. Self-Healing ML Systems

Advanced monitoring will enable pipelines to automatically detect and address issues, potentially reconfiguring themselves based on detected patterns.

Conclusion: The Path Forward

Building scalable ML pipelines requires a thoughtful approach that balances technical requirements with business needs. By embracing automation, containerization, robust versioning, and modern architectural patterns, organizations can create ML systems that scale effectively and deliver consistent value.

As you embark on your ML scaling journey, remember that the most successful implementations focus not just on technology but also on the people and processes that surround it. A well-designed pipeline empowers data scientists, ML engineers, and business stakeholders to collaborate effectively toward shared goals.

Need help building scalable ML pipelines for your organization? Contact Straton AI today to learn how our expert team can accelerate your journey to production ML.