Data Security in the Age of AI: Protecting Your Most Valuable Asset

As artificial intelligence transforms business operations across industries, it introduces unique data security challenges that extend beyond traditional cybersecurity concerns. Organizations implementing AI solutions must adopt comprehensive security strategies that address the entire data lifecycle—from collection and preprocessing to model training and deployment. This article explores the critical aspects of data security in AI implementations and provides actionable strategies for protecting your most valuable asset: your data.

The Unique Data Security Challenges of AI

AI systems present several security challenges that traditional applications don't:

1. Expanded Attack Surface

AI pipelines significantly expand the attack surface of an organization by introducing:

- Data collection interfaces that may process sensitive information from multiple sources

- Storage systems containing vast amounts of training and inference data

- Model training environments that may be vulnerable during the computation-intensive training phase

- Inference endpoints accessible to users or other systems

- Monitoring and logging systems that capture model behavior and potentially sensitive inputs

Each component represents a potential entry point for attackers, requiring comprehensive security controls across the entire AI lifecycle.

2. Data Poisoning Vulnerabilities

Unlike traditional systems, AI models are vulnerable to data poisoning attacks, where adversaries manipulate training data to introduce backdoors or biases. These attacks are particularly insidious because:

- They can be difficult to detect through conventional security monitoring

- Effects may not be apparent until the model is deployed in production

- The compromise can persist across model updates if poisoned data remains in training sets

According to recent security research, poisoning as little as 3% of a training dataset can reduce model accuracy by up to 91% in targeted scenarios, or introduce persistent backdoors that bypass security controls.

3. Model Extraction and Inversion Risks

AI models themselves represent valuable intellectual property and can be vulnerable to:

- Model extraction: Where attackers query an AI system to replicate its functionality

- Model inversion: Where attackers reconstruct training data by analyzing model outputs

- Membership inference: Where attackers determine if specific data was used in training

These attacks can lead to intellectual property theft, privacy violations, and regulatory compliance issues, particularly in industries handling sensitive personal data.

Data Security Framework for AI Systems

Addressing these challenges requires a comprehensive security framework that spans the entire AI lifecycle:

1. Secure Data Collection and Preprocessing

The first step in AI security is ensuring the integrity and confidentiality of input data:

Data Source Validation

- Implement cryptographic verification of data sources

- Establish trusted data partnerships with verified providers

- Document provenance for all training and validation datasets

- Use blockchain or digital signatures to verify data integrity
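To make the integrity checks above concrete, here is a minimal Python sketch. It assumes dataset shards arrive as bytes alongside a manifest of SHA-256 digests. The function names, shard names and shared key are hypothetical. The sketch uses an HMAC tag from the standard library as a stand-in for a digital signature. Because HMAC relies on a shared secret, a production pipeline would more likely sign the manifest with an asymmetric scheme, so that data consumers can verify it without being able to forge it.

```python
import hashlib
import hmac


def sha256_digest(data: bytes) -> str:
    """Hex SHA-256 digest of one dataset shard."""
    return hashlib.sha256(data).hexdigest()


def sign_manifest(digests: dict[str, str], key: bytes) -> str:
    """HMAC-SHA256 tag over a canonical (sorted) rendering of the manifest.

    Stand-in for a real digital signature (assumption: shared-secret setting).
    """
    canonical = "\n".join(f"{name}:{digest}" for name, digest in sorted(digests.items()))
    return hmac.new(key, canonical.encode("utf-8"), hashlib.sha256).hexdigest()


def verify_shards(shards: dict[str, bytes], digests: dict[str, str], tag: str, key: bytes) -> list[str]:
    """Return names of shards that are missing, unexpected, or altered.

    Raises ValueError if the manifest itself fails authentication.
    """
    expected_tag = sign_manifest(digests, key)
    if not hmac.compare_digest(expected_tag.encode("ascii"), tag.encode("utf-8")):
        raise ValueError("manifest tag mismatch: provenance cannot be trusted")
    suspect = set(digests) ^ set(shards)  # listed but absent, or present but unlisted
    for name, data in shards.items():
        if name in digests and sha256_digest(data) != digests[name]:
            suspect.add(name)
    return sorted(suspect)


# Hypothetical shards and key, for illustration only.
key = b"hypothetical-shared-secret"
shards = {"part-0.csv": b"id,amount\n1,10\n", "part-1.csv": b"id,amount\n2,20\n"}
digests = {name: sha256_digest(data) for name, data in shards.items()}
tag = sign_manifest(digests, key)

tampered = {**shards, "part-1.csv": b"id,amount\n2,99999\n"}
print(verify_shards(tampered, digests, tag, key))  # ['part-1.csv']
```

The manifest is authenticated first. Once it is trusted, each shard only needs a plain digest comparison.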

Privacy-Preserving Techniques

- Apply differential privacy to protect individual records while maintaining statistical utility

- Implement k-anonymity, l-diversity and t-closeness for structured data

- Use synthetic data generation for highly sensitive datasets

- Deploy data minimization to collect only necessary information
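The following sketch illustrates the k-anonymity and l-diversity checks listed above by measuring both properties over a small in-memory table. The column names, sample records and generalization rules (10-year age bands, truncated ZIP codes) are hypothetical choices for the example. They are not recommendations for any particular dataset.

```python
from collections import Counter, defaultdict


def k_anonymity(records, quasi_identifiers):
    """Smallest equivalence-class size over the quasi-identifier columns (0 for no records)."""
    classes = Counter(tuple(r[q] for q in quasi_identifiers) for r in records)
    return min(classes.values()) if classes else 0


def l_diversity(records, quasi_identifiers, sensitive):
    """Smallest number of distinct sensitive values within any equivalence class."""
    groups = defaultdict(set)
    for r in records:
        groups[tuple(r[q] for q in quasi_identifiers)].add(r[sensitive])
    return min(len(values) for values in groups.values()) if groups else 0


def generalize(record):
    """Hypothetical generalization: 10-year age bands and 3-digit ZIP prefixes."""
    low = (record["age"] // 10) * 10
    return {**record, "age": f"{low}-{low + 9}", "zip": record["zip"][:3] + "**"}


records = [
    {"age": 34, "zip": "94107", "diagnosis": "flu"},
    {"age": 36, "zip": "94103", "diagnosis": "asthma"},
    {"age": 52, "zip": "94110", "diagnosis": "flu"},
    {"age": 57, "zip": "94112", "diagnosis": "diabetes"},
]
qi = ["age", "zip"]
print(k_anonymity(records, qi))  # 1: every raw record is unique
released = [generalize(r) for r in records]
print(k_anonymity(released, qi), l_diversity(released, qi, "diagnosis"))  # 2 2
```

Generalizing the quasi-identifiers makes each released record indistinguishable from at least one other record. Each group also still contains two different diagnoses.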

Implementation Example: A healthcare AI developer implemented a multi-tiered data protection strategy, combining differential privacy (ε=3.0) with synthetic data generation for rare conditions. This approach reduced privacy risk exposure by 87% while maintaining 94% of model accuracy compared to training on raw data.
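The ε in the example above is the differential privacy budget: smaller values add more noise and give stronger protection. A minimal sketch of the classic Laplace mechanism for a single counting query follows. It only illustrates how ε controls the noise. Protecting model training itself typically relies on other mechanisms, such as DP-SGD. The helper names and data here are hypothetical.

```python
import random


def laplace_noise(scale: float, rng: random.Random) -> float:
    """Sample Laplace(0, scale) as the difference of two i.i.d. exponential draws."""
    rate = 1.0 / scale
    return rng.expovariate(rate) - rng.expovariate(rate)


def dp_count(values, predicate, epsilon: float, rng: random.Random) -> float:
    """Release a count satisfying epsilon-differential privacy.

    Adding or removing one record changes a count by at most 1 (L1 sensitivity 1),
    so Laplace noise with scale 1/epsilon is sufficient.
    """
    if epsilon <= 0:
        raise ValueError("epsilon must be positive")
    true_count = sum(1 for v in values if predicate(v))
    return true_count + laplace_noise(1.0 / epsilon, rng)


# Hypothetical patient ages; the query asks how many patients are over 60.
ages = [34, 71, 65, 48, 59, 80, 62, 27]
rng = random.Random(2024)
noisy = dp_count(ages, lambda a: a > 60, epsilon=3.0, rng=rng)
print(f"noisy count: {noisy:.2f}")
```

Each released answer consumes part of the privacy budget. Repeated queries against the same data must therefore be accounted for together.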

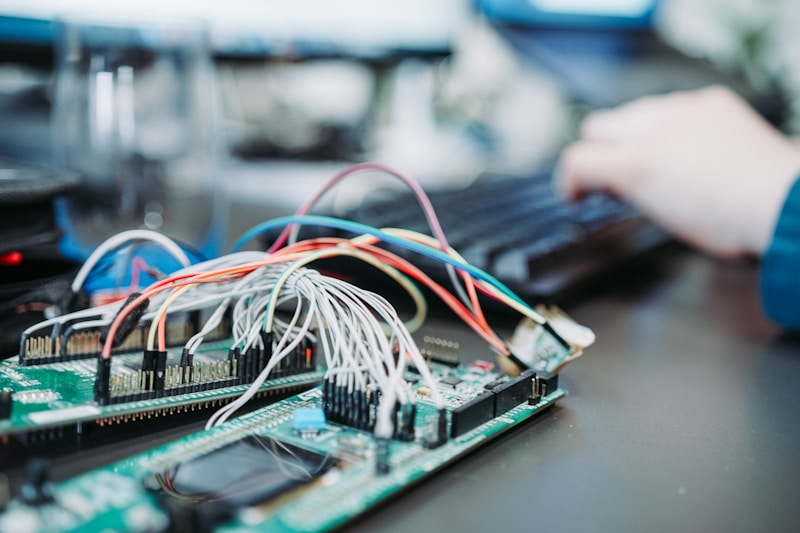

2. Secure Model Training Environments

The model training phase is particularly vulnerable due to its intensive computational requirements and complex data flows:

Isolated Training Environments

- Use dedicated, air-gapped infrastructure for training highly sensitive models

- Implement strict network segmentation and access controls

- Deploy security monitoring specifically designed for ML workloads

- Encrypt data in memory during training operations

Training Data Protection

- Implement dynamic data watermarking to detect tampering

- Use secure multi-party computation for collaborative training

- Deploy anomaly detection to identify poisoning attempts

- Implement robust data validation before training workflows

Security Metrics: Organizations implementing these controls have reported up to 76% reduction in successful adversarial attacks against their training pipelines, according to the latest AI Security Benchmark Report.
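One simple form of the poisoning-focused anomaly detection listed above is to flag training samples whose features are outliers relative to other samples with the same label. This is a common symptom of label-flipping poisoning. The sketch below applies the median-based modified z-score to a single numeric feature, using a cutoff of 3.5, which is a widely used convention. The data are hypothetical. Real pipelines would apply multivariate or embedding-based checks instead.

```python
from collections import defaultdict
from statistics import median


def modified_z_scores(values):
    """Median/MAD-based z-scores, which a handful of extreme points cannot distort much."""
    med = median(values)
    mad = median(abs(v - med) for v in values)
    if mad == 0:
        return [0.0] * len(values)  # no spread: this simple check cannot rank outliers
    return [0.6745 * (v - med) / mad for v in values]


def flag_suspicious(samples, threshold=3.5):
    """Return indices of (feature, label) samples that are outliers within their own label."""
    by_label = defaultdict(list)
    for idx, (x, y) in enumerate(samples):
        by_label[y].append((idx, x))
    flagged = []
    for members in by_label.values():
        scores = modified_z_scores([x for _, x in members])
        flagged.extend(idx for (idx, _), z in zip(members, scores) if abs(z) > threshold)
    return sorted(flagged)


# Hypothetical data: class 0 clusters near 1.0, class 1 near 5.0.
# The last sample looks like class 1 but carries label 0 (a flipped label).
samples = [(0.9, 0), (1.0, 0), (1.1, 0), (1.05, 0), (0.95, 0),
           (4.9, 1), (5.0, 1), (5.2, 1), (5.1, 1), (4.95, 1),
           (5.1, 0)]
print(flag_suspicious(samples))  # [10]
```

Flagged samples are candidates for review rather than automatic deletion, since rare but legitimate cases can also look like outliers.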

3. Model Security and Access Controls

Trained models require specific security controls to prevent extraction, inversion, and unauthorized access:

| Security Control | Protection Offered | Implementation Approach |
|---|---|---|
| Input Validation | Prevents adversarial examples and malicious inputs | Implement strict validation, sanitization, and normalization of all inputs |
| Rate Limiting | Prevents model extraction through mass querying | Apply API rate limits per user, with adaptive thresholds for suspicious patterns |
| Confidence Masking | Reduces information leakage | Round confidence scores or limit detailed probability distributions in outputs |
| Adversarial Robustness | Protects against evasion attacks | Train models with adversarial examples to build resistance |
| Output Filtering | Prevents sensitive data disclosure | Scan model outputs for potential data leakage or PII before returning results |

Implementation Note: These controls must be balanced against performance requirements. For example, extensive input validation may increase latency, requiring optimization for time-sensitive applications.
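The rate limiting and confidence masking rows of the table can be sketched as two pieces: a per-user token bucket, and an output filter that returns only the top label with a rounded score. This is a minimal in-memory, single-process illustration with hypothetical names and limits. It does not implement the adaptive thresholds mentioned in the table. A multi-instance deployment would keep bucket state in a shared store.

```python
import time


class TokenBucket:
    """Allows bursts up to `capacity` requests, refilled at `refill_per_sec` tokens per second."""

    def __init__(self, capacity, refill_per_sec, clock=time.monotonic):
        self.capacity = float(capacity)
        self.refill_per_sec = float(refill_per_sec)
        self.clock = clock
        self.tokens = self.capacity
        self.last = clock()

    def allow(self):
        now = self.clock()
        self.tokens = min(self.capacity, self.tokens + (now - self.last) * self.refill_per_sec)
        self.last = now
        if self.tokens >= 1.0:
            self.tokens -= 1.0
            return True
        return False


class PerUserRateLimiter:
    """One bucket per caller, so one client's mass querying cannot consume another's quota."""

    def __init__(self, capacity, refill_per_sec, clock=time.monotonic):
        self.capacity, self.refill_per_sec, self.clock = capacity, refill_per_sec, clock
        self.buckets = {}

    def allow(self, user_id):
        if user_id not in self.buckets:
            self.buckets[user_id] = TokenBucket(self.capacity, self.refill_per_sec, self.clock)
        return self.buckets[user_id].allow()


def mask_confidences(probs, top_k=1, decimals=1):
    """Return only the top-k labels with coarsely rounded scores to limit leakage."""
    ranked = sorted(probs.items(), key=lambda item: item[1], reverse=True)[:top_k]
    return {label: round(p, decimals) for label, p in ranked}


print(mask_confidences({"fraud": 0.8734, "legit": 0.1266}))  # {'fraud': 0.9}
```

Rounding to one decimal and hiding the runner-up class reduce the signal available to extraction and membership inference attacks. The cost is less informative outputs for legitimate clients.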

4. Continuous Monitoring and Security Testing

AI systems require continuous security monitoring that extends beyond traditional approaches:

Model Behavior Monitoring

- Implement drift detection to identify unusual shifts in input or output patterns

- Deploy explainability tools to understand model decisions for security analysis

- Monitor model outputs for potential data leakage or security violations

- Use canary tokens within training data to detect extraction attempts
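Drift detection can start with a simple distribution comparison such as the Population Stability Index (PSI). PSI compares the binned distribution of a live input feature against a training-time baseline. The bin edges and samples below are hypothetical. A commonly cited rule of thumb treats PSI below 0.1 as stable and above 0.25 as a significant shift, but thresholds should be tuned per feature.

```python
import bisect
import math


def bin_proportions(sample, edges, floor=1e-4):
    """Share of the sample falling in each bin; out-of-range values go to the edge bins."""
    n_bins = len(edges) - 1
    counts = [0] * n_bins
    for x in sample:
        idx = bisect.bisect_right(edges, x) - 1
        counts[min(max(idx, 0), n_bins - 1)] += 1
    total = len(sample) or 1
    return [max(c / total, floor) for c in counts]  # floor avoids log(0) for empty bins


def psi(baseline, live, edges):
    """Population Stability Index: sum of (live - base) * ln(live / base) over bins."""
    base = bin_proportions(baseline, edges)
    cur = bin_proportions(live, edges)
    return sum((c - b) * math.log(c / b) for b, c in zip(base, cur))


edges = [0.0, 0.25, 0.5, 0.75, 1.0]
baseline = [i / 100 for i in range(100)]        # hypothetical training-time feature values
shifted = [0.8 + i / 1000 for i in range(100)]  # hypothetical production values
print(round(psi(baseline, baseline, edges), 3))  # 0.0
print(psi(baseline, shifted, edges) > 0.25)      # True
```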

Specialized Security Testing

- Conduct adversarial testing using tools like IBM's Adversarial Robustness Toolbox

- Perform membership inference testing to assess privacy vulnerabilities

- Simulate model inversion attacks in controlled environments to identify risks

- Conduct regular red team exercises against AI systems

According to recent research, organizations that implement specialized AI security testing identify 3.4x more vulnerabilities than those relying solely on traditional security testing methods.
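A basic version of the membership inference testing listed above compares the model's per-example loss on training records (members) with its loss on held-out records (non-members). If a simple threshold on the loss separates the two groups well, the model is leaking membership information. The sketch below computes the best advantage (true positive rate minus false positive rate) achievable by such a loss-threshold attack. The loss values are hypothetical and would come from your own evaluation harness.

```python
def membership_advantage(member_losses, nonmember_losses):
    """Best TPR - FPR of an attacker who guesses 'member' whenever loss <= threshold."""
    best = 0.0
    for t in sorted(set(member_losses) | set(nonmember_losses)):
        tpr = sum(loss <= t for loss in member_losses) / len(member_losses)
        fpr = sum(loss <= t for loss in nonmember_losses) / len(nonmember_losses)
        best = max(best, tpr - fpr)
    return best


# Hypothetical per-example losses on the training set and on a holdout set.
train_losses = [0.02, 0.05, 0.04, 0.30, 0.01]
holdout_losses = [0.45, 0.20, 0.80, 0.03, 0.60]
print(round(membership_advantage(train_losses, holdout_losses), 2))  # 0.6
```

An advantage near 0 means the attack does no better than chance. Values approaching 1 indicate that training records are easy to identify, which often points to overfitting.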

Regulatory Compliance and AI Data Security

The regulatory landscape for AI security is rapidly evolving, with several key frameworks emerging:

Compliance Considerations for AI Systems

- GDPR: Requires data protection impact assessments (DPIAs) for high-risk processing, data minimization, and meaningful information about the logic of automated decisions when AI systems process personal data of individuals in the EU

- CCPA/CPRA: Grants California residents rights over their personal information, including when it is used by AI systems

- AI Act (EU): Introduces risk-based requirements for AI systems, with stringent controls for high-risk applications

- NIST AI RMF: Provides voluntary risk management guidelines for trustworthy AI

- Industry-specific regulations: for example, HIPAA for health data, GLBA for financial data, and sector-specific rules for critical infrastructure, all of which apply when AI systems process regulated data

Organizations must implement governance frameworks that address these regulatory requirements while enabling innovation. Documentation of security controls, risk assessments, and impact analyses is crucial for demonstrating compliance.

Case Study: Financial Services AI Security Transformation

A multinational financial institution successfully implemented a comprehensive AI data security program for its fraud detection and credit scoring systems:

- Initial Challenge: The organization needed to protect highly sensitive financial data while enabling AI-driven fraud detection and risk assessment.

- Security Implementation:
  - Deployed differential privacy for customer transaction data with custom privacy budgets based on data sensitivity
  - Implemented federated learning across regional data centers to comply with data localization requirements
  - Created an isolated, air-gapped environment for initial model training with strict access controls
  - Deployed a comprehensive monitoring system that tracked model drift, input anomalies, and potential data extraction attempts
  - Implemented a secure API gateway with rate limiting, input validation, and output filtering

- Results:
  - Achieved compliance with financial regulations across 14 jurisdictions
  - Reduced security incidents by 76% compared to the previous system
  - Maintained model accuracy within 2% of non-privacy-preserving approaches
  - Successfully passed external security audits with zero critical findings
  - Enabled secure expansion to new markets with strong data sovereignty requirements
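The case study does not say which federated learning algorithm was used. As an assumption, the sketch below shows federated averaging (FedAvg), a common baseline. Each regional site trains locally and shares only model parameters, and a coordinator averages those parameters, weighted by local example counts. Raw transaction data never leaves its region. Parameters can still leak information, which is why deployments often pair this approach with secure aggregation or differential privacy. The function name and weights are hypothetical.

```python
def fed_avg(client_updates):
    """Average client weight vectors, weighted by each client's number of training examples.

    client_updates: list of (weights, num_examples) pairs, one per regional site.
    """
    if not client_updates:
        raise ValueError("no client updates")
    total = sum(n for _, n in client_updates)
    if total <= 0:
        raise ValueError("total example count must be positive")
    dim = len(client_updates[0][0])
    averaged = [0.0] * dim
    for weights, n in client_updates:
        if len(weights) != dim:
            raise ValueError("all clients must share the same model shape")
        for i, w in enumerate(weights):
            averaged[i] += w * n / total
    return averaged


# Hypothetical round with two regions holding different amounts of data.
eu_update = ([1.0, 2.0], 1000)
us_update = ([3.0, 4.0], 3000)
print(fed_avg([eu_update, us_update]))  # [2.5, 3.5]
```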

Best Practices for Implementing AI Data Security

1. Adopt a Security-by-Design Approach

Security must be integrated from the earliest stages of AI development:

- Include security requirements in the initial design specifications

- Conduct threat modeling specifically for AI components

- Implement secure development practices for ML code

- Train data scientists and ML engineers on security principles

2. Implement Defense in Depth

No single control can protect AI systems; multiple layers of security are essential:

- Deploy security controls at data, model, infrastructure, and application levels

- Combine preventative, detective, and responsive controls

- Implement both technical and procedural safeguards

- Adopt an assume-breach mindset and design for resilience

3. Balance Security with Usability

Excessive security controls can hinder AI effectiveness:

- Implement risk-based security that adapts protection levels to the sensitivity of data and models

- Design security controls that minimize impact on model performance

- Focus on controls that provide the greatest risk reduction with the least operational impact

- Regularly review security measures against business requirements

4. Establish Clear Governance

Strong governance frameworks ensure consistent security implementation:

- Define clear roles and responsibilities for AI security

- Establish oversight committees with appropriate expertise

- Implement audit mechanisms for security compliance

- Create incident response procedures specific to AI security incidents

Future Trends in AI Data Security

The field of AI security continues to evolve rapidly, with several emerging trends worth monitoring:

1. Confidential Computing for AI

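Hardware-based trusted execution environments (TEEs), which isolate data and encrypt it in memory even while it is being processed, are becoming increasingly important for securing AI workloads. They allow sensitive data and models to be used without exposing them to the host operating system, hypervisor, or cloud operator.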

2. Homomorphic Encryption

While still computationally intensive, advances in homomorphic encryption are making it increasingly practical for specific AI use cases, allowing models to operate on encrypted data without decryption.
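To illustrate what computing on encrypted data means, the toy example below implements the Paillier cryptosystem. Paillier is only additively homomorphic, unlike the fully homomorphic schemes needed to evaluate neural networks. A server holding only ciphertexts can add encrypted values, and only the key holder can decrypt the sum. The tiny primes make this completely insecure. It is a teaching sketch, not something to deploy, and real systems should use a vetted library.

```python
import math
import random


def keygen(p, q):
    """Paillier keys from two primes, using the common simplification g = n + 1."""
    n = p * q
    lam = math.lcm(p - 1, q - 1)
    mu = pow(lam, -1, n)  # with g = n + 1, L(g^lam mod n^2) = lam mod n
    return n, (lam, mu)  # public key n, private key (lam, mu)


def encrypt(n, m, rng):
    """Encrypt 0 <= m < n with fresh randomness r coprime to n."""
    if not 0 <= m < n:
        raise ValueError("plaintext out of range")
    n2 = n * n
    r = rng.randrange(1, n)
    while math.gcd(r, n) != 1:
        r = rng.randrange(1, n)
    return (pow(n + 1, m, n2) * pow(r, n, n2)) % n2


def decrypt(n, priv, c):
    lam, mu = priv
    x = pow(c, lam, n * n)
    return ((x - 1) // n) * mu % n


def add_encrypted(n, c1, c2):
    """Multiplying ciphertexts adds the underlying plaintexts (mod n)."""
    return (c1 * c2) % (n * n)


# Toy primes: completely insecure, chosen only so the numbers stay readable.
n, priv = keygen(61, 53)
rng = random.Random(7)
c_a = encrypt(n, 120, rng)          # e.g. one branch's encrypted transaction total
c_b = encrypt(n, 35, rng)
c_sum = add_encrypted(n, c_a, c_b)  # computed without the private key
print(decrypt(n, priv, c_sum))      # 155
```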

3. AI-Powered Security

Security teams are increasingly using AI to defend AI, with specialized models designed to detect anomalies in AI behavior, identify potential attacks, and automatically respond to security incidents.

4. Zero-Trust Architectures for AI

The principles of zero-trust security—never trust, always verify—are being adapted specifically for AI systems, with continuous authentication and verification throughout the AI lifecycle.
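In practice, "always verify" means that every call to a model endpoint carries a credential, and that credential is checked on each request for authenticity, expiry and scope. Callers are not trusted simply because they sit inside the network. The sketch below uses a hypothetical token format (base64-encoded JSON claims plus an HMAC-SHA256 tag) purely for illustration. Production systems would typically rely on established standards such as OAuth 2.0 access tokens or mutual TLS rather than a custom format.

```python
import base64
import hashlib
import hmac
import json


def issue_token(key: bytes, subject: str, scope: str, ttl_s: int, now: int) -> str:
    """Create a short-lived token binding a caller identity to one permitted action."""
    claims = {"sub": subject, "scope": scope, "exp": now + ttl_s}
    payload = json.dumps(claims, sort_keys=True).encode("utf-8")
    tag = hmac.new(key, payload, hashlib.sha256).hexdigest()
    return base64.urlsafe_b64encode(payload).decode("ascii") + "." + tag


def authorize(key: bytes, token: str, required_scope: str, now: int) -> bool:
    """Verify authenticity, expiry, and scope on every request; deny on any failure."""
    try:
        encoded, tag = token.rsplit(".", 1)
        payload = base64.urlsafe_b64decode(encoded.encode("ascii"))
    except ValueError:  # malformed token or invalid base64 (binascii.Error is a ValueError)
        return False
    expected = hmac.new(key, payload, hashlib.sha256).hexdigest()
    if not hmac.compare_digest(expected.encode("ascii"), tag.encode("utf-8")):
        return False
    claims = json.loads(payload)  # safe to parse: the payload's tag has been verified
    return claims.get("exp", 0) > now and claims.get("scope") == required_scope


key = b"hypothetical-demo-key"
token = issue_token(key, "svc-fraud-scoring", "model:predict", ttl_s=300, now=1_000)
print(authorize(key, token, "model:predict", now=1_100))  # True
print(authorize(key, token, "model:export", now=1_100))   # False: wrong scope
```

Short lifetimes and narrow scopes limit what a stolen credential can do. Checking on every request means a revoked or expired token stops working immediately.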

Conclusion: Security as an Enabler for AI Innovation

Rather than viewing security as a constraint, forward-thinking organizations recognize that robust data security enables AI innovation by:

- Building trust with customers and stakeholders

- Ensuring regulatory compliance and avoiding penalties

- Protecting valuable intellectual property in AI models

- Enabling the use of sensitive data for valuable AI use cases

- Providing a competitive advantage in highly regulated industries

By implementing a comprehensive security framework that addresses the unique challenges of AI systems, organizations can confidently deploy innovative AI solutions while protecting their most valuable asset: their data.

Need help securing your AI implementations? Contact Straton AI today to learn how our experts can help you build secure, compliant AI systems that protect your data while delivering business value.